# Algorithmic decisions shape our experience of the world

Helena Webb, University of Oxford

Most of us now spend a large proportion of our lives online. In the UK, 88% of adults have Internet access and spend up to one day a week online, accessing sites at work, at home and whilst on the move. The most popular celebrities on social media have over 100 million followers, and increasing numbers of users, in particular young people, get their news from social media rather than from newspapers or television programmes. More and more, we rely on a small number of very powerful platforms to access the kinds of online content we want to see. According to Facebook, 1.45 billion Internet users log on to the social network at least once a day and 2.2 billion are active at least once a month. Google processes on average 40 000 search queries per second, a total of 3.5 billion queries every day. Given our heavy use of, and reliance on, the Internet, it is very important that we keep asking questions to make sure that the online sites we access, and the platforms that drive them, are acting in our best interests.

Over the last few years there have been growing concerns about the impact of automated decision-making on our online experiences. Whenever we browse online, algorithms shape what we see by filtering and sometimes personalising content. For instance, when we enter a query into a search engine, algorithmic processes determine which results we see and the order in which they appear. Similarly, when we look at Facebook and other social networks, personalisation algorithms determine the advertisements and posts that appear in our individual feeds. These processes can be very useful to us: they save us immense amounts of time by leading us straight to content that we are likely to find relevant and interesting.

## Why are people concerned?

However, there are also concerns that these processes can lead to outcomes that are unfavourable, unfair or even discriminatory.

- Personalisation algorithms can be irritating when they show us advertisements for products that we have already bought or do not want. They also rely on the collection of personal data, and individuals can feel unnerved by how much personal information about them is being collated, experiencing it as an invasion of privacy. Users are also often unaware of how much personal data is collected as they browse, and of how it is shared with unknown third parties, as seen in the recent scandal over the activities of Cambridge Analytica.

- The results of the EU Referendum in the UK and the US Presidential election, both in 2016, led to much debate over the roles played by social media in democratic campaigns. Some have argued that the algorithmic processes that determine the content of our news feeds can be manipulated so that we are exposed to a steady stream of fake news or misleading content. Others have expressed concerns that personalisation mechanisms can place users within ‘filter bubbles’ in which they are only shown content they are likely to agree with already. As a result, they are not challenged to consider alternative viewpoints and political stances.

- Controversies have also arisen over the results of search engine queries. For instance, searches for images of ‘female football fans’, ‘unprofessional hairstyles’ or ‘3 black teenagers’ may return results that reflect common, derogatory stereotypes of gender and race. Google and other search platforms point out that these results occur because the online content their algorithms draw on is already skewed, not because the algorithms themselves are biased. Nevertheless, given the massive number of searches made every day, there is a risk that these kinds of results inadvertently reinforce societal prejudices.

## What is the UnBias project all about?

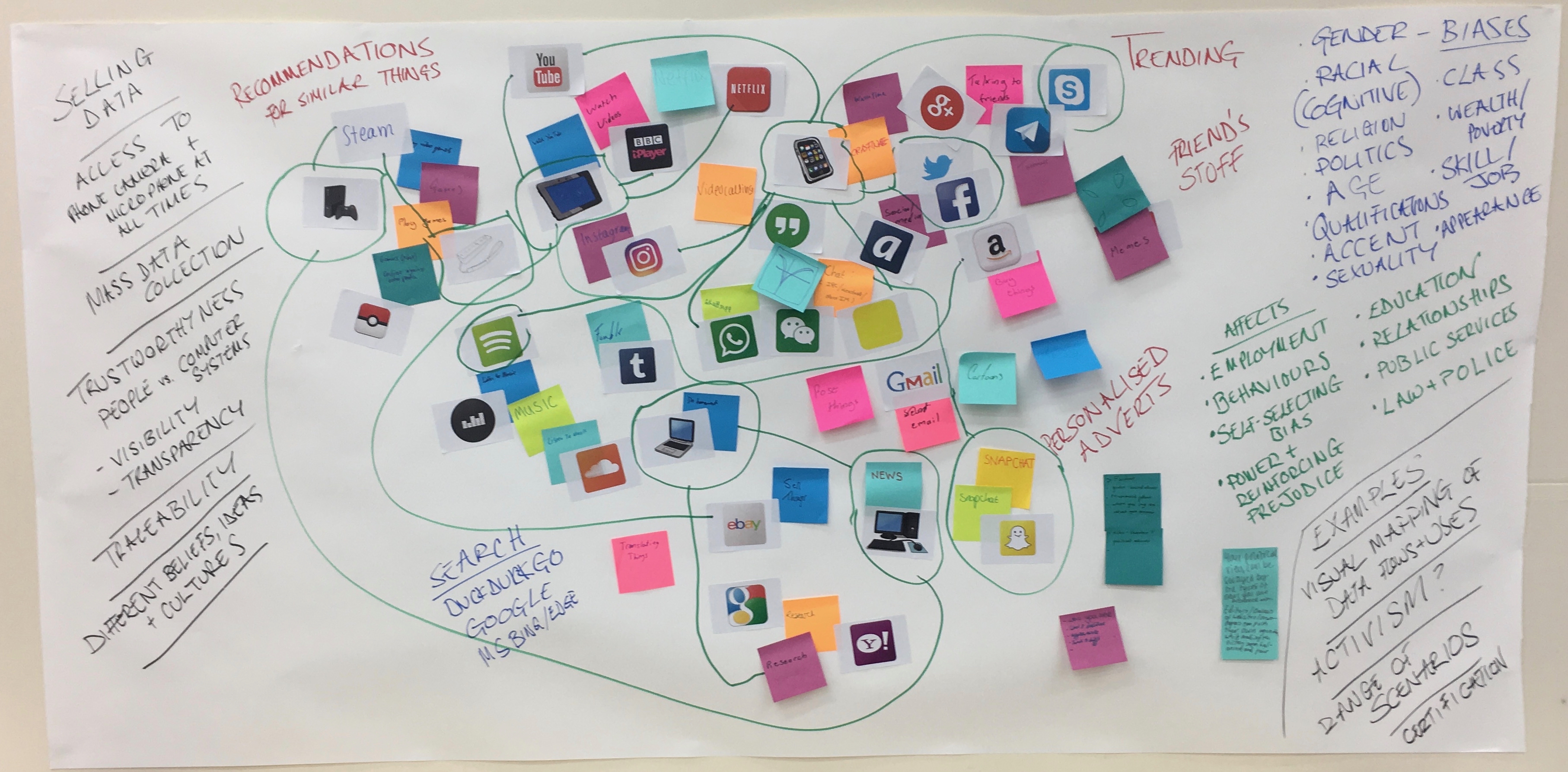

Mind map produced by young people at an UnBias workshop exploring the everyday ecology of apps, services, the potential for bias and its effects.

The UnBias project explores these concerns arising from the prevalence of algorithms online. Our research team brings together social scientists and computer scientists from the Universities of Oxford, Nottingham and Edinburgh. The project aims to explore the user experience of algorithm-driven Internet platforms and the processes of algorithm design. We recognise that the prevalence of algorithms is an ethical issue, and we ask how we can judge the trustworthiness and fairness of systems that rely heavily on them. Our research activities include:

- fun, interactive ‘youth jury’ sessions to elicit the perspectives and concerns of young Internet users
- observations of web browsing and of the ways in which algorithmic processes shape the online experience
- experiments and surveys to explore how ‘fair’ algorithms might be identified and how they might operate
- engagement activities with stakeholders including industry professionals, policy makers, educators, NGOs, and online users.

Through these activities we have identified genuine worries amongst different user groups that the contemporary online experience can sometimes be unfair. In keeping with the concerns described above, our participants have told us that the algorithmic processes driving online platforms can invade user privacy and influence understanding in negative ways. Other factors can exacerbate these problems. Users often lack awareness of the ways in which algorithms filter and personalise the online content they see; even when they are aware, they can feel powerless to improve their online experience. In addition, the algorithms used by Facebook, Google and other platforms are considered commercially sensitive and are therefore not made available for open inspection. In any case, they are highly complex technically and difficult for most of us to understand.

We are using the findings of the UnBias project to promote fairness online. Our activities aim to support user understanding of online environments, raise awareness among online providers of the concerns and rights of Internet users, and generate debate about the ‘fair’ operation of algorithms in modern life. The project will also produce policy recommendations, educational materials and a ‘fairness toolkit’ to promote public civic dialogue about how algorithms shape online experiences and how online unfairness might be addressed. We are delighted to take part in the Digital Design Weekend to showcase our work and help empower Internet users to seek a better, fairer online experience.

‘UnBias: Emancipating Users Against Algorithmic Biases for a Trusted Digital Economy’ is an ongoing research project funded by the Engineering and Physical Sciences Research Council. It runs from September 2016 to November 2018. The project is led by the University of Nottingham in collaboration with the Universities of Oxford and Edinburgh. The project team are:

University of Nottingham: Professor Derek McAuley, Dr Ansgar Koene, Dr Elvira Perez Vallejos, Dr Helen Creswick, Dr Virginia Portillo, Monica Cano, Dr Liz Dowthwaite

University of Edinburgh: Dr Michael Rovatsos, Dr Alan Davoust

University of Oxford: Professor Marina Jirotka, Dr Helena Webb, Dr Menisha Patel

Proboscis: Giles Lane

For more information about the project see: https://unbias.wp.horizon.ac.uk/